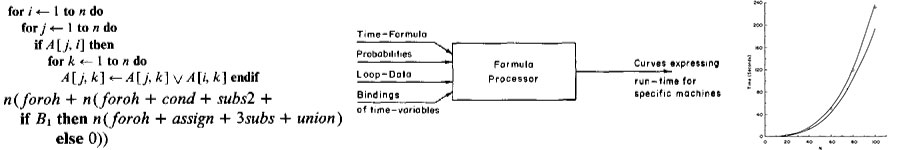

The interest in the analysis of algorithms, [Knu 68] and the availability of a full-fledged syntax-directed compiler-compiler, [CC 75]prompted me to develop a tool that was capable of reading programs written in Pascal-like languages and to (attempt) to generate a symbolic formula — called a time-formula – whose variables – called time-variables –express the time to perform individual operations such as addition, multiplication, subscripting, and loop-overhead [Coh 82].

Once a time-formula is generated, one can resort to a symbolic manipulation package to evaluate the actual time of execution of a program as a function of variables denoting the number of times that loops are executed. In the case of simple programs such as matrix multiplication, our system was able to generate a cubic equation expressing — in much greater detail than the ubiquitous BigO notation — the time of execution of a program as a function of n, the size of the matrix, and the time-variables. The symbolic value of n is obtained through an interactive dialogue with the formula generator where the user can state symbolically the number of times that each of a program’s loop is executed.

By binding the time-variables to their values (say, in micro-seconds), one can plot the execution times of a program as a function of the variables expressing the number of times each loop was performed. This of course works perfectly well for programs not containing conditionals and whose loops are known to terminate.

In the case of conditionals, an approximation had to be introduced. The program would generate a sub-formula of the type:

……………………(probability

………………………..(sub-time-formula for the then-branch)

………………………..(sub-time-formula for the else-branch))

The time-formula evaluator then proceeds accordingly, by evaluating the relative probabilities for executing the then and else subcomponents. This is, of course, an approximation. The presence of assignments and loops in the program being analyzed actually makes the value of the variable probability a function of time, and not a constant as it is assumed. Fundamentally, we know that the undecidability results of Turing prevent us from being able to evaluate the execution time of general programs. Nevertheless, our tool turns out to be very useful in determining execution times of various programs running in different computers. Micro-analysis, as I propose it, is not dissimilar to the efforts of Knuth to determine execution times of programs written in his suggested assembly language (MIX). Instead, our tool determines the execution times of programs written in high-level language with the help of their users.

In 1975, Ben Wegbreit accomplished a next step in automatic program analysis [Weg 75]. He demonstrated how to generate symbolic finite-difference equations by having his tool examine loops that were performed recursively, as in Lisp. Assuming that a program does not contain assignments, Wegbreit’s tool is capable of automatically analyzing small Lisp programs. As in the case of our tool, conditionals are handled by assuming that probability values remained unchanged during program execution.

Lyle Ramshaw, a doctoral student working under Donald Knuth at Stanford, carried out what I consider a final push to attempt to determine automatically the execution time of programs [Ram 79]. Ramshaw introduced a “chromatic plumbing” metaphor to mimic the execution of a program expressed as a flowchart. One assumes that pipes connect the various boxes of a flowchart and the boxes themselves are intermediate containers. There is an initial box representing the program’s start and another the program’s end.

One visualizes program execution by the path of a colored pellet traveling through the plumbing network starting from its initial box. The color of the pellet represents the vector of the program’s variables. As an assignment box is performed, the pellet changes color. A pellet going through a conditional box follows the yes or no paths according to the value of its color. If the program terminates, its color in the final box describes the values of the program’s variables. Obviously, the numbers of times loops are executed depend on the various shades of color attained in a pellet’s trajectory.

Ramshaw’s approach, as ours, consists of two phases: the determination of a time-formula and its evaluation. He shows that one can always generate a system of finite-difference equations expressing the time of execution of a program. Informally, those equations are based on the successive colors attained by a pellet as it travels in the plumbing network. His approach is related to that used in program correctness and in the determination of loop invariants.

The solution of the generated equations belongs to the realm of mathematics—they may or may not be solvable. Interestingly, Ramshaw did not implement his approach and used a few small programs to illustrate it. In 1984, a colleague and I succeeded in implementing a workable version of Ramshaw’s approach and applied it to some non-trivial programs [HC 88]. Unfortunately, it is formally enticing but practically ineffectual. For example, it is exceedingly difficult to consider programs containing arrays. My claim is that the simple approach I have taken in [CZ 74], coupled with the determination of simpler finite difference equations expressing the number of loop iterations [CK 77a], [CK 77b] may yield valuable results, especially when comparing different programs to accomplish equivalent tasks.

My students and I have successfully analyzed non-trivial programs using the (simplified) microanalysis approach. To obtain valuable results, the analysis has to be user-aided. Some of the references below indicate the papers in microanalysis that have been published in various topics:

- Parsing [CR 78]

- Matrix Multiplication using Strassen’s method [CR 76]

- Memory Management and Garbage Collection [CN 83]

- Text Processing [CW 92]

I also have done research in three ancillary research topics relating to automatic program analyses:

The first consisted in symbolic solving systems of finite-difference equations that could express the number of times program loops are executed. I believe I was among the first to attempt to solve such equations [CK 77a], [CK 77b]. This work is closely related to symbolic summation that in turn relates to integration. I recall that in the year following this research I worked during a sabbatical at MIT with Joel Moses — the developer of Macsyma — on symbolic summation. Shortly after that association, Karr and Gosper published the definitive work on this topic. [Gos 78], [Kar 81].

The second ancillary problem relates to the determination of volumes of multi-dimensional polyhedra [CH 79]. That work emerged in the following context: consider a straight-line program (i.e., loop-less) in which all the conditionals are expressed by systems of linear inequations. Then, the probability of executing the conditional is related to the volume of the polyhedron representing the inequations. This assumes that all variables have known upper and lower bounds, which is the case for most program variables. I believe I am among the first researchers, if not the first, to consider this very problem and to provide solutions to it. The paper was my first published in the Journal of the ACM and had an undergraduate (Timothy Hickey) as a co-author. In retrospect, this problem also relates to constraint logic programming, an area I became interested in later on.

The third problem relates to automatic program analysis in the counting of strings of a given fixed length generated by context-free grammars (CFG). That problem arises when attempting to determine the average case complexity of parsers for languages generated by those grammars. As an example, consider determining how many arithmetic expressions of length 10 are generated by a grammar specifying those expressions. Furthermore, we would like to know how many of those expressions contain one plus sign, two plus signs, etc. That information is needed to estimate the average case complexity of a parser. I was among the first to consider that problem, which evolved from the work on analysis of deterministic parsers [CR 78]. Marty Roth, the undergraduate who was my author in that paper, has the merit of discovering the relationship between counting the number of a given terminal in strings of certain length and the number of steps needed to parse that string. I first worked on a similar, but easier, problem of determining the number of strings of a given length in finite-state grammars [CK 77a], [CK 77b]. That amounted to generating finite difference equations that could be solved for determining the desired number of strings. The same occurs in the case of CF grammars [CH 83]. Later on, Philippe Flajolet and his colleagues generalized the solution for other types of finite-difference equations generated by programs and useful in average-case analyses [FSZ 91].

In the late eighties, my students and I considered the case of microanalysis of parallel programs. A description of my involvement with parallelism appears in [HCHP 92].

References

[FSZ 91] P. Flajolet, B. Salvy, P. Zimmermann, Automatic Average-Case Analysis of Algorithms, Theoretical Computer Science, 79(1), 37-109, 1991.

[Gos 78] R. W. Gosper, Decision Procedure for Indefinite Hypergeometric Summation, Proceedings National. Academy of Sciences, USA, 75, 40-42, 1978.

[Kar 81] M. Karr, Summation in Finite Terms, Journal of the ACM, 28(2), 305-350, 1981.

[Knu 68] D. Knuth, The Art of Computer Programming, Volume 1: Fundamental Algorithms, First Edition, Addison Wesley, 1968

[Ram 79] L. Ramshaw, Formalizing the Analysis of Algorithms, Ph.D. Thesis, Stanford University, 1979. (Also available as Report SL-79-5, Xerox Palo Alto Research Center, Palo Alto, California, 1979).

[Weg 75] B. Wegbreit, Mechanical Program Analysis, Communications of the ACM, 18(9), 528-538, 1975.