Having had substantial experience with sequential algorithms for parsing and garbage collection, I was naturally attracted to the problems of how to perform those tasks in parallel. This interest developed in the mid-eighties, when parallel machines were not yet fully accessible.

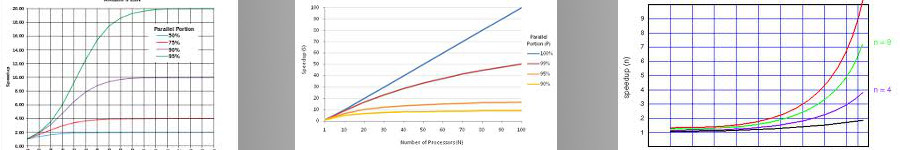

A paper I co-authored with two undergraduates in [CHK 82] describes my involvement with parallelism in parsing. We considered how multiple processors could be placed in adjacent and equal segments of the string being parsed and proceed with the parsing without resorting to the information available from its immediate left processor. This rationale was based on the ability of a processor to independently parse a sequence of tokens; for example one representing a while-loop whose entire body was in the segment to which it had been assigned. If that occurred, no interruptions or interactions with a left processor would be needed. The goal of the paper was to estimate the possible speed-ups when several processors were assigned to parse a long string.

In a best possible scenario, the processors could work with no interruptions for a fairly long time and then merge their information on the left to continue the parsing. In an extreme, unfavorable case with many processors, there would be several that could not accomplish their task without resorting to communication with their left neighbors. This model is theoretical in nature and related to program analysis.

A few years later, I co-authored a paper, again with an undergraduate, in which an actual simulation was undertaken to determine possible speed-ups when parsing programs in an actual programming language [CK 85].

The research in parallel garbage collection (GC) was also conducted with an undergraduate co-author [CH 84]. It was based on Dijkstra’s approach for blending actual computation with collecting (i.e., recognizing discarded memory cells). The work provides estimates of efficiencies that can be attained for various ratios of memory availability and computational resources. It considered regions of the space of possible ranges of the variables for which efficiencies could vary markedly. In retrospect, I now believe that constraint logic programming (CLP) can be used to specify the boundaries of those regions.

My involvement with actual parallel computers began in the early 1990s, when I was the Principal Investigator in a NSF CISE grant awarded to Brandeis; that grant provided significant funds for purchasing both SIMD and MIMD machines.

SIMD machines, even though they have become less popular in recent years, remain an intellectual challenge to program. In the mid 1990s, another undergraduate helped me in generalizing the work of Vishkin [Vis 85] on the parsing of matching parenthesis. We were able to take CFG and perform the parsing on a Maspar computer using variants of the matching parenthesis technique.

Three of my doctoral students combined different areas in computer science with research on parallelism:

- Shyam Mudambi did his dissertation on parallel logic Programming implementations using MIMD’s [Mud 92].

- Aline Yurik, developed software tools for analyzing parallel programs [Yur 94].

- Suresh Kalathur determined speed-ups in interval constraint logic programming in both MIMD and SIMD architectures [Kal 99].

References

[Vis 85] U. Vishkin, Optimal Parallel Pattern Matching in Strings, Information and Computation, 67, 91-113, 1985.