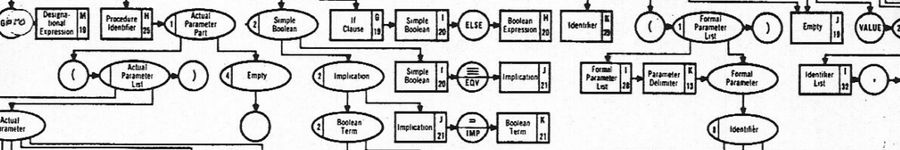

Algol 60 Syntactic Chart

M

y involvement with parsing began as soon as I arrived in Grenoble in the early sixties. At that time, Burroughs had published a graphic syntactic chart of Algol that was very handy to use [TTW 61]. Essentially, that chart displayed Backus Naur Form (BNF) as a 2D map: Every appearance of a non-terminal (metavariable) in the right hand side of a grammar rule contained an indicator of the 2D coordinates where that non-terminal is defined. This visualization was unusually influential. We had copies of it posted in our offices and got to know it almost by heart.

It was by that time that Brooker and Morris developed their recursive descent syntax-directed parsers [BMR 67] in England. I recall reading about another recursive method proposed by E. T. Irons, who was then a doctoral student at Princeton [Iro 61]. The Brooker-Morris approach was top-down whereas Iron’s was bottom-up. I remember implementing both algorithms, but it is important to understand that recursion in the sixties had not yet reached the widespread usage that it now has. Few compilers were able to handle recursion correctly mainly because the parameters were called in a variety of forms (e.g., value, reference, name) and it was not semantically clear how those parameters should be passed.

During the sixties, there was an articulated interest in Chomsky’s papers on grammars and hierarchies. S. Ginsburg had written a text on formal grammars and languages [Gin 66], but in that work there were no hints as how to apply the theory to practical algorithms that we all sensed were applicable to compilers. There were a number of influential papers that attempted to do precisely that and those papers had a considerable impact on my view of the field of parsing and compiling. Among them I recall the following:

- R. Floyd succeeded to explain the empirical work of Dijkstra on operator precedence parsing and its relationship with restricted types of context-free grammars [Flo 63].

- E. Irons illustrated how to use ambiguous grammars in error detection [Iro 63].

- D. Knuth proposed an interesting deterministic algorithm for bottom-up parsing by looking ahead to a fixed number of input characters [Knu 65]. Knuth himself thought that the proposed algorithm would not be practical but was contradicted a few years later when researchers like deRemer proved otherwise [DeR 71].

- N. Wirth and H. Weber were able to generalize Floyd’s precedence algorithm [WW 66], and subsequently, J. Ichbiah and S. Morse improved precedence parsing to its present form [IM 70].

- T. Griffiths and S. Petrick published a key paper on the relative efficiency of bottom-up and top-down parsers. Their approach consisted of simulating parsers using a two-stack Turing machines, simulating their behavior and establishing empirical estimates for efficiency [GP 65].

The aforementioned papers certainly influenced my views on syntax-directed compilers. They also had a considerable impact on the work of my Grenoble colleague, Alain Colmerauer, who was able to generalize Floyd’s work on precedence grammars by introducing two-stack parsers instead of one [Col 70]. His work covered both deterministic and non-deterministic parsers and was, perhaps, inspirational to his subsequent key role in developing Prolog.

My own interest during my time in Grenoble became the development of languages for compiler writing, which ultimately became the subject of my second doctoral dissertation [Coh 67a]. This quest explored almost all the ingredients for implementing packages like YACC and LEX. However, that project would have been too ambitious at that time; computers lacked the necessary memory and speed and the available programming languages were relatively primitive as compared to those that became available in the seventies.

Nevertheless, I have been among the first researchers to propose higher-level languages for writing compilers. In fact, with the help of Monique Brasseur, a colleague from Grenoble, I wrote a report in which we used Algol 60 to implement a syntax-directed interpreter for Algol 60. In that work, we used the stack machine of Randell and Russell [RR 64]to carry out the interpretation of the compiled program, pretty much in the spirit of what Java accomplishes nowadays.

It was N. Wirth who later carried out complete and beautiful implementations of Pascal using Pascal itself. It is interesting to note that Wirth started using bottom-up precedence parsers and then switched to recursive descent parsers. This is probably a result of his philosophy that one should be able to provide a complete and readable implementation of the language using the language itself, as Lisp implementers have been doing since that language inception.

It was only in the mid-seventies that I succeeded in implementing a full syntax-direct compiler generator with the help of some of my very bright Brandeis undergraduate students. To date, that has probably been the largest software implementation project that I have ever supervised. Brandeis had just purchased and made available to students a PDP-10. Since portability was extremely desirable at the time, we had the chutzpah to use Fortran as the implementation language. We had the help of Jean Ichbiah in providing the details of his weak-precedence parsers. Lexical scanners were also generated upon user specification of finite state tables.

The project was a success because of its originality — being among the first compiler generators — as well as the fact that it was the result of successful collaboration among highly motivated students implementing a complex software package. Of course, the package lacked the professional veneer of an implementation like YACC and LEX, which were written by the research group at Bell Labs a few years later. A publication describing my experience in developing the Brandeis’ compiler generator appeared in Software – Practice and Experience [CC 75].

My subsequent work in parsing and compiling occurred with the assistance of talented Brandeis undergraduates, who helped me to co-author of a number of papers on the subject. Among them, I mention with pride the ACM Communications paper with Marty Roth, which I consider to be the first serious analysis of deterministic parsing algorithms [CR 78]. Marty made the clever observation that to specify the number of rule usages in deterministic parsers, it was sufficient to count the number of certain terminals in the input string that also appeared in each grammar rule. By using a variant of Kirchhoff’s law, one can determine the number of times each rule is used by a parser as a function of the number of certain terminals appearing in an input string. For example, in the case of a grammar with rules for specifying arithmetic expressions, a count of the number of +’s, *’s, left and write parentheses, identifiers, etc. that appear in an input string can reveal the number of times each of the grammar rules is used.

An important consideration for performing an average case analysis of deterministic parsing algorithms is to be able to determine the number of strings of a fixed length n, generated by a given Chomsky type grammar. In a paper with Joel Katcoff, we illustrated how that number could be determined (even symbolically) in the case of finite-state grammars [CK 77a, CK 77b]. In a paper with Timothy Hickey, we demonstrated that one could determine the number of strings of length n in the case of context-free languages [CH 83]. A key to both papers is the generation of finite-difference equations that specify the number of strings of a given length.

Co-authored with Robin Sitver, our paper showed how one could use program transformation rules to demonstrate the equivalence of a precedence parser (a la Floyd) to Dijkstra’s empirical parser using operator’s weights [CS 79]—a work that is related to parser optimization.

Neal Carpenter and I developed a language for inquiring about the execution configuration of programs written in a high-level language [CC 77]. Basically, we implemented an interpreter for a high-level computer language like Pascal in which the user could specify labels (identifiers) that preceded any command. During the execution of a program, records were kept of the values of variables before and after passing through a given label. After execution, one could inquire about the state of the program variables by specifying a regular expression on the labels, establishing a given state of the execution (e.g., after passing any number of times through a given loop followed by the execution of a false branch of a conditional).

In a paper written with Eileen Carter, we developed a variant of Fortran in which one could specify non-deterministic primitives like choice, success, and failure [CC 74]. That work was inspired by Floyd’s paper on non-deterministic algorithms [Flo 67].

In the early eighties, after my involvement in Logic Programming, I challenged myself to describe in Prolog, most of the algorithms used in the “Dragon Book”, a well-known text on compilers written by Aho, Sethi and Ullman [ASU 86]. Alain Colmerauer and David H. Warren had already shown the advantages of using Prolog in certain aspects of parsing and compiling [Col 78], [War 80], but I wanted to show that many other approaches were possible. A paper describing that effort was co-authored with Timothy Hickey [CH 87].

References:

[ASU 86] A. Aho, R. Sethi, J. Ullman, Compilers: Principles, Techniques and Tools, Addison-Wesley, 1986.

[BMR 67] R. Brooker, D. Morris and J. Rohl, Experience with the Compiler Compiler, Computer Journal, 9(4), 345-349, 1967.

[Col 70] A. Colmerauer, Total Precedence Relations, Journal of the ACM, 17(1), 14-30, 1970.

[Col 78] Alain Colmerauer, Metamorphosis Grammars, Natural Language Communication with Computers, 133-189, 1978

[DeR 71] F. DeRemer, Simple LR(k) Grammars, Communications of the ACM, 14(7), 453-460, 1971.

[Flo 63] R. Floyd, Syntactic Analysis and Operator Precedence, Journal of the ACM, 10(3), 316-333, 1963.

[Flo 67] R. Floyd, Nondeterministic Algorithms, Journal of the ACM, 14(4), 636-644, 1967.

[Gin 66] S. Ginsburg, The Mathematical Theory of Context Free Languages, McGraw-Hill Book Company, New York, 1966.

[GP 65] T. Griffiths, S. Petrick, On the Relative Efficiencies of Context-Free Grammar, Communications ACM, 8(.), 289-300, 1965.

[IM 70] J. Ichbiah, S. Morse, A Technique for Generating Almost Optimal Floyd-Evans Productions for Precedence Grammars, Communications of the ACM, 13(8), 501-508, 1970.

[Iro 61] E. Irons, A Syntax Directed Compiler for ALGOL 60, Communications of the ACM, 4(1), 51-55, 1961.

[Iro 63] E. Irons, An Error-Correcting Parse Algorithm, Communications of the ACM, 6(11), 669-673, 1963.

[Knu 65] D. Knuth, On the Translation of Languages from Left to Right, Information and Control, 8(6), 607-639 1965.

[RR 64] B. Randell, L. Russel, Algol60 Implementation, Academic Press, New York, 1964.

[TTW 61] W. Taylor, L. Turner, R. Waychoff, A Syntactical Chart of ALGOL 60, Communications of the ACM, 4(9), 1961.

[War 80] D. Warren, Logic Programming and Compiler Writing, Software – Practice and Experience, 10(2), 97-125, 1980.

[WW 66] N. Wirth, H. Weber, EULER: A Generalization of Algol and its Formal Definition, Communications of the ACM, 9(1), 13-25, 1966.